How to enhance video quality through AI?

Last updated on:3 months ago

Video enhancement encompasses a variety of tasks such as super-resolution, deblurring, denoising, inpainting, frame interpolation, and improving high dynamic range. In addition to enhancing the quality of individual video frames, it is also essential to address the motion information between frames through techniques like motion estimation and motion compensation.

Introduction

Video enhancement techniques can improve visual quality and those that focus on technical aspects. Conventionally, visual enhancements include sharpening/deblurring, noise reduction/denosing, colour correction, and upscaling/super resolution. Technical enhancements involve stabilisation (e.g., HDR (high dynamic range)), deinterlacing, and frame interpolation.

Most recently, video enhancement includes inpainting with the developing of deep learning and generative AI.

Video enhancement refers to techniques used to improve the visual quality of videos, making them clearer, more detailed, and more enjoyable to watch.

Applications

- Improve the performance of low-quality

- Medical imagery reconstruction

- Small object analysis in remote sensing

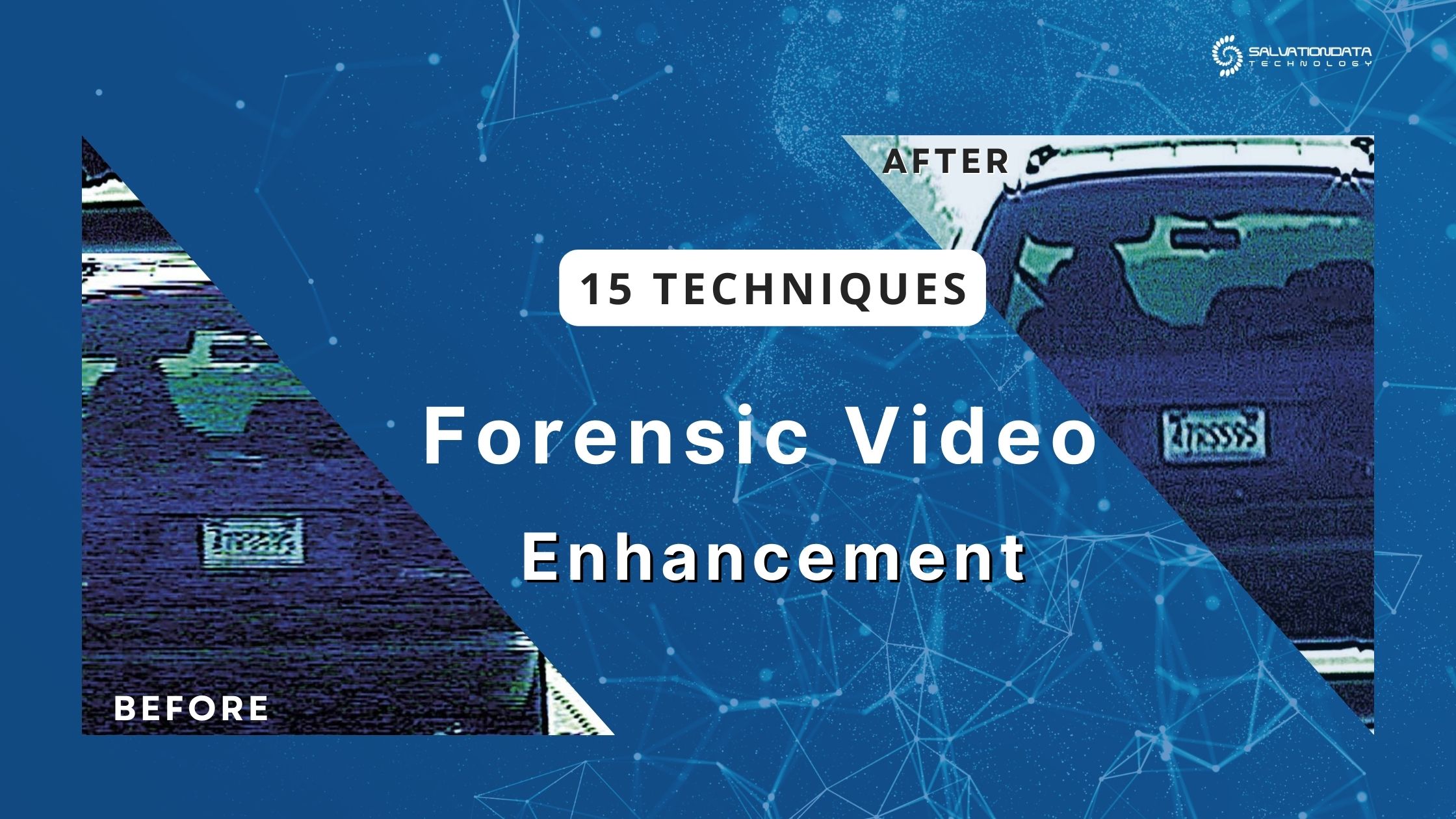

- Surveillance systems

- High-definition television

Super resolution (SR)

Upscaling increases the resolution of a video, making it sharper and more precise, especially on larger screens.

Check How to promote low-resolution video to super-resolution video? for details.

Deblurring

Sharpening the video reduces blurriness and improves details in the video.

Ensure correction algorithms do not introduce softness.

Check How to denoise and deblur videos? for details.

Denoising

Noise reduction minimise visual noise or grain, resulting in a clearer image.

Temporal noise may come from image and video encoding artefacts and sensor noise.

Check How to denoise and deblur videos? for details.

Frame interpolation

Frame interpolation increases the frame rate of a video by generating new frames, creating smoother slow-motion effects.

Need precise motion estimation and compensation models.

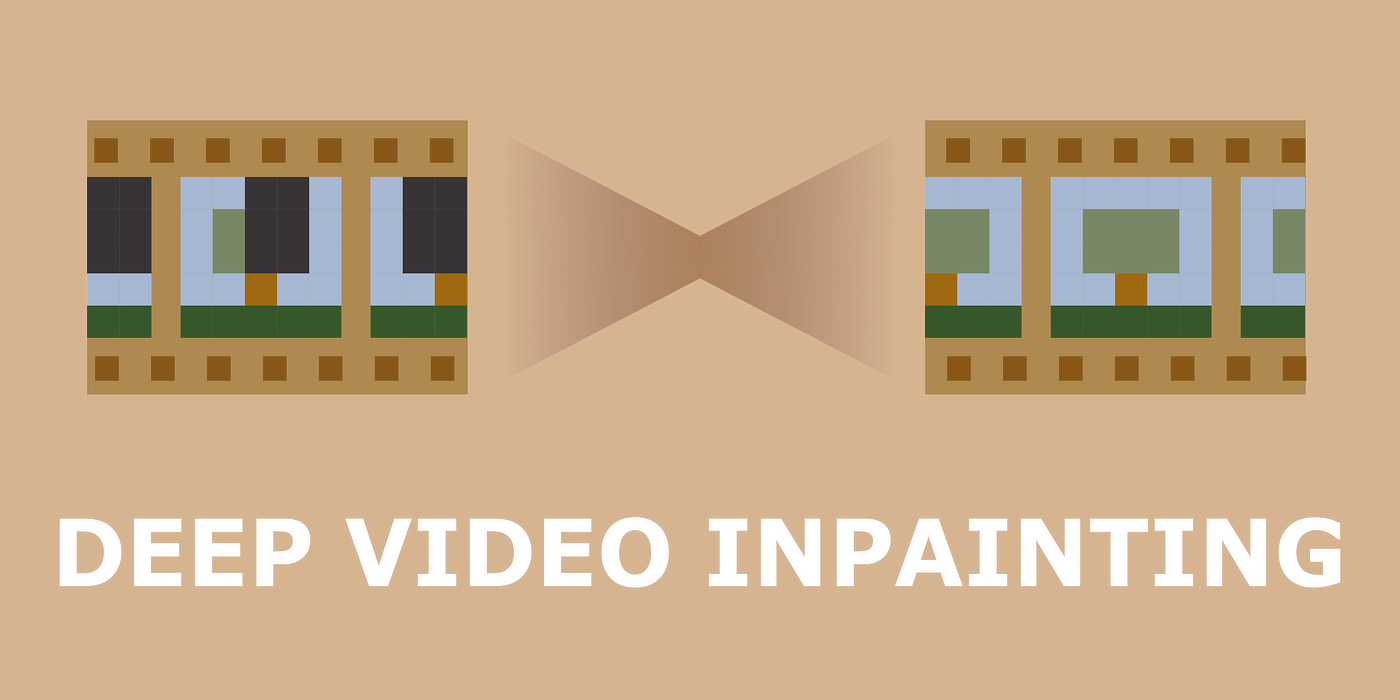

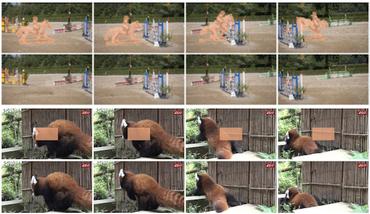

Inpainting

Video inpainting is the process of filling in missing or corrupted areas of a video sequence with plausible content. It aims to reconstruct or restore videos by “painting” over masked or damaged regions, making the footage look complete and natural.

The goal of video inpainting is to fill in missing regions of a given video sequence with both spatially and temporally coherentcontents.

masked auto encoder (MAE) can be a great backbone for the inpainting task since it is trained to recover the masked regions.

High dynamic range

HDR enhancement improves the dynamic range of the video, displaying more details in both shadows and highlights.

Reference

- Liu, H., Ruan, Z., Zhao, P., Dong, C., Shang, F., Liu, Y., Yang, L. and Timofte, R., 2022. Video super-resolution based on deep learning: a comprehensive survey. Artificial Intelligence Review, 55(8), pp.5981-6035.

- Su, H., Li, Y., Xu, Y., Fu, X. and Liu, S., 2025. A review of deep-learning-based super-resolution: From methods to applications. Pattern Recognition, 157, p.110935.

- Jain, V., Wu, Z., Zou, Q., Florentin, L., Turbell, H., Siddhartha, S., Timofte, R., Gao, Q., Jiang, L., Luo, Q. and Song, J., 2025. NTIRE 2025 challenge on video quality enhancement for video conferencing: Datasets, methods and results. In Proceedings of the Computer Vision and Pattern Recognition Conference (pp. 1184-1194).

- Dosovitskiy, A., Fischer, P., Ilg, E., Hausser, P., Hazirbas, C., Golkov, V., Van Der Smagt, P., Cremers, D. and Brox, T., 2015. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision (pp. 2758-2766).

- ClementPinard/FlowNetPytorch

- Optical Flow: Predicting movement with the RAFT model

本博客所有文章除特别声明外,均采用 CC BY-SA 4.0 协议 ,转载请注明出处!