Some small bugs and features of deep learning

Last updated on: 2 years ago

Basic concepts matter when learning deep learning, so in this blog I collect the small bugs and useful features I have run into.

Features

Return index of resampled dataset

Check the under-sampling methods, specifically the parameter return_indices, which defaults to False and can be set to True. When enabled, the sampler also returns the indices of the selected samples, and most of the under-samplers support it.
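As far as I know, in imbalanced-learn the return_indices parameter was later deprecated (around version 0.4) in favour of a fitted sample_indices_ attribute, so check which version you have. To show what "returning the indices" means, here is a minimal sketch of random under-sampling written from scratch in plain Python. random_undersample_indices is a hypothetical helper, not a library function, and it assumes every class is shrunk to the size of the smallest class.

```python
import random
from collections import defaultdict


def random_undersample_indices(labels, seed=0):
    """Hypothetical helper (not a library API): randomly under-sample every
    class down to the size of the smallest class and return the kept indices."""
    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    # Every class keeps as many samples as the smallest class has.
    n_min = min(len(idxs) for idxs in by_class.values())

    rng = random.Random(seed)
    kept = []
    for idxs in by_class.values():
        kept.extend(rng.sample(idxs, n_min))
    return sorted(kept)


labels = [0, 0, 0, 0, 0, 1, 1, 2, 2, 2]
indices = random_undersample_indices(labels)
resampled_labels = [labels[i] for i in indices]
```

The returned indices can then be used to pick the matching rows of the feature matrix, or any metadata that has to stay aligned with the resampled samples.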

Reference:

return index of resampled dataset

PyTorch resize and scale

torchvision.transforms.Resize(size, interpolation=InterpolationMode.BILINEAR, max_size=None, antialias=None)

Resize the input image to the given size. If the image is a torch Tensor, it is expected to have […, H, W] shape, where … means an arbitrary number of leading dimensions.

Reference:

view() and .size()

x.size(0) is the same as x.shape[0]. Tensor.view() returns a view that shares the original tensor's data, which allows fast and memory-efficient reshaping, slicing, and element-wise operations.
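A common use is flattening feature maps before a linear layer. The sketch below, built on an arbitrary example tensor, checks that x.size(0) equals x.shape[0] and that view() shares memory with the original tensor instead of copying it.

```python
import torch

x = torch.arange(48).reshape(4, 3, 4)  # a contiguous tensor of shape (4, 3, 4)
assert x.size(0) == x.shape[0] == 4    # size(0) and shape[0] are the same

# Flatten everything except the batch dimension; -1 lets PyTorch infer it.
y = x.view(x.size(0), -1)              # shape (4, 12)

# view() does not copy: y shares storage with x, so a write shows up in both.
y[0, 0] = 100
print(y.shape, x[0, 0, 0].item())
```

view() only works when the requested shape is compatible with the tensor's memory layout. On a non-contiguous tensor (for example after .t()) it raises an error, and .reshape() or .contiguous().view() is the usual alternative.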

Reference:

Bugs

__init__() got multiple values for argument 'BNorm'

The arguments were passed in the wrong order, so BNorm was supplied one extra time when initializing the module.
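The error is easy to reproduce. In this sketch ConvBlock is a hypothetical module whose third parameter happens to be BNorm. Passing three positional arguments and also BNorm=... assigns that parameter twice, which Python rejects with a TypeError. Newer Python versions may prefix the message with the class name, e.g. ConvBlock.__init__().

```python
class ConvBlock:
    """Hypothetical module whose third parameter is BNorm."""

    def __init__(self, in_ch, out_ch, BNorm=True):
        self.in_ch = in_ch
        self.out_ch = out_ch
        self.BNorm = BNorm


message = ""
try:
    # Wrong: the three positional arguments already fill in_ch, out_ch and
    # BNorm, so the keyword BNorm=False supplies BNorm a second time.
    ConvBlock(3, 16, True, BNorm=False)
except TypeError as err:
    message = str(err)

# Right: pass BNorm exactly once, here as a keyword argument.
block = ConvBlock(3, 16, BNorm=False)
print(message)
```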

conda : The term ‘conda’ is not recognized as the name of a cmdlet, function, script file, or operable program

conda : The term 'conda' is not recognized as the name of a cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

Wait a minute and try again.

The efficientnet paper insists efficientnet-b0 is 0.39 Gflops, but the measurement is 00.2Gflops

from ptflops import get_model_complexity_info
from pytorchcv.model_provider import get_model as ptcv_get_model

net = ptcv_get_model("efficientnet_b0", pretrained=True)
macs, params = get_model_complexity_info(net, (3, 224, 224), print_per_layer_stat=False)
# ('0.4 GMac', '5.29 M')

With the pytorchcv implementation, ptflops reports about 0.4 GMac, in line with the paper's 0.39 GFLOPs. The implementation that produced the lower number performs its convolutions with F.conv2d. Unfortunately, ptflops cannot catch arbitrary F.* calls, because it works by adding hooks to known nn.Module classes such as nn.Conv2d. If you enable print_per_layer_stat, you will see that the Conv2dStaticSamePadding module is treated as a zero-op, since there is no handler for this custom module (which internally calls F.conv2d). That is why ptflops reports fewer operations than expected.
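To illustrate why a hook-based counter misses functional calls, here is a hypothetical, much smaller counter (not ptflops' actual code) that attaches a forward hook only to modules whose type is nn.Conv2d. A plain nn.Conv2d is counted, while a custom module that calls F.conv2d inside forward() gets no hook and contributes zero MACs, the same blind spot described above. count_conv_macs, HANDLED_TYPES and FunctionalConv are illustrative names.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical table of module types the counter knows how to handle.
HANDLED_TYPES = {nn.Conv2d}


def count_conv_macs(model, input_shape):
    """Count conv MACs via forward hooks on known module types only."""
    total = 0

    def hook(module, inputs, output):
        nonlocal total
        # Each output element needs (in_channels / groups) * k_h * k_w
        # multiply-accumulates (bias ignored).
        k_h, k_w = module.kernel_size
        total += output.numel() * (module.in_channels // module.groups) * k_h * k_w

    handles = [m.register_forward_hook(hook)
               for m in model.modules() if type(m) in HANDLED_TYPES]
    with torch.no_grad():
        model(torch.zeros(1, *input_shape))
    for h in handles:
        h.remove()
    return total


class FunctionalConv(nn.Module):
    """Custom module that calls F.conv2d directly, so no hook is attached."""

    def __init__(self, in_ch, out_ch, k):
        super().__init__()
        self.weight = nn.Parameter(torch.randn(out_ch, in_ch, k, k))

    def forward(self, x):
        return F.conv2d(x, self.weight, padding=1)


module_macs = count_conv_macs(nn.Conv2d(3, 8, 3, padding=1, bias=False), (3, 32, 32))
functional_macs = count_conv_macs(FunctionalConv(3, 8, 3), (3, 32, 32))
print(module_macs, functional_macs)  # the functional conv is counted as 0
```

Both models do the same amount of work (8 output channels on a 32 x 32 map with a 3 x 3 kernel over 3 input channels), yet only the nn.Conv2d version is counted.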

Reference:

The efficientnet paper insists efficientnet-b0 is 0.39 Gflops, but the measurement is 00.2Gflops

Python decorator not working for some .py files

@register function

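The module that defines an @register-decorated function must be imported before any other code looks the function up in the registry. The decorator only runs when that module is imported, so functions in modules that were never imported are never registered.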
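To see why import order matters, the sketch below writes two hypothetical modules to a temporary directory: demo_registry.py defines a register decorator that stores functions in a dict, and demo_models.py decorates a function with it. The registry stays empty until demo_models is actually imported, because the decorator only runs when the def statement executes. All module and function names are made up for illustration.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Write two hypothetical modules into a temporary directory.
tmp = Path(tempfile.mkdtemp())
(tmp / "demo_registry.py").write_text(
    "REGISTRY = {}\n"
    "def register(fn):\n"
    "    REGISTRY[fn.__name__] = fn  # runs when the def statement executes\n"
    "    return fn\n"
)
(tmp / "demo_models.py").write_text(
    "from demo_registry import register\n"
    "@register\n"
    "def resnet():\n"
    "    return 'resnet'\n"
)
sys.path.insert(0, str(tmp))
importlib.invalidate_caches()  # make the newly written files importable

registry = importlib.import_module("demo_registry")
before = "resnet" in registry.REGISTRY  # demo_models not imported yet

importlib.import_module("demo_models")  # executing the module runs @register
after = "resnet" in registry.REGISTRY
print(before, after)
```

In a real project this usually means importing the module that holds the decorated functions (for example from the package's __init__.py) before anything reads the registry.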

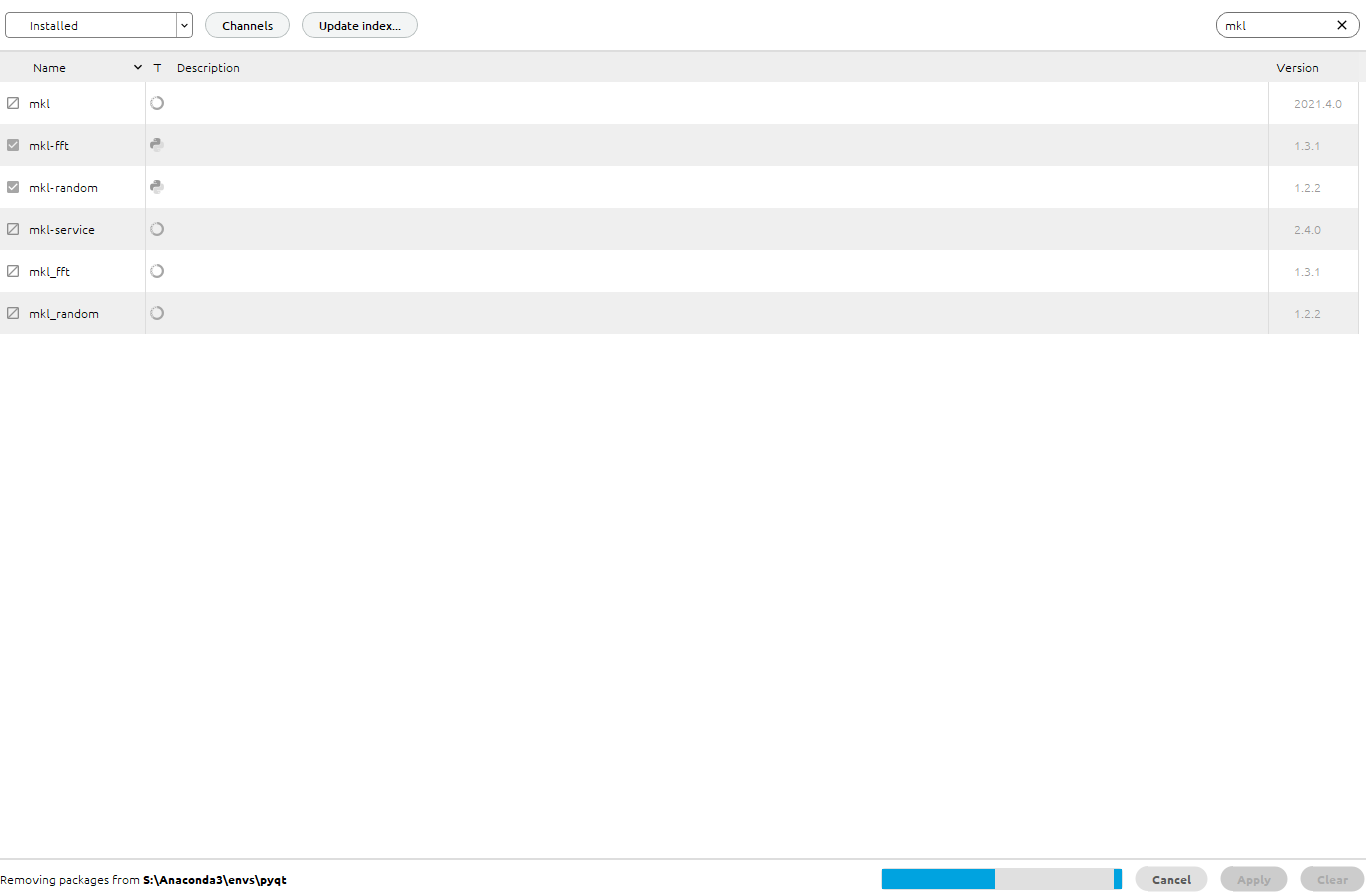

OMP: Error #15: Initializing libiomp5md.dll, but found libiomp5md.dll already initialized.

OMP: Hint This means that multiple copies of the OpenMP runtime have been linked into the program. That is dangerous, since it can degrade performance or cause incorrect results. The best thing to do is to ensure that only a single OpenMP runtime is linked into the process, e.g. by avoiding static linking of the OpenMP runtime in any library. As an unsafe, unsupported, undocumented workaround you can set the environment variable KMP_DUPLICATE_LIB_OK=TRUE to allow the program to continue to execute, but that may cause crashes or silently produce incorrect results. For more information, please see http://www.intel.com/software/products/support/.

Remove MKL from the Anaconda environment.
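If you do need the workaround the message mentions, one way is to set the variable from Python at the very top of the script, before importing libraries that load the OpenMP runtime (such as torch or an MKL-linked NumPy). As the message itself warns, this is unsafe and can silently produce incorrect results; removing the duplicate runtime is the better fix.

```python
import os

# Unsafe, unsupported workaround quoted in the Intel OpenMP error message.
# Set it before importing any library that loads the OpenMP runtime.
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

# import torch  # imports that pull in the OpenMP runtime go after this line
```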

Unless otherwise stated, all posts on this blog are licensed under CC BY-SA 4.0. Please credit the source when reposting.