How to auto-generate sitemap.xml and robots.txt for a website powered by Hexo

Last updated on: 2 years ago

Creating robots.txt and sitemap.xml by hand every time the site changes is tedious. Fortunately, if your personal site is powered by Hexo, plugins can generate both files for you whenever the site is built. This post shows how to set them up.

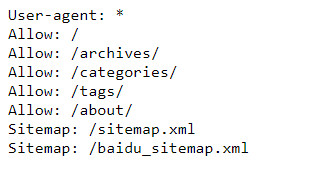

robots.txt

Install

npm install hexo-robotstxt-multisitemaps --save

Enable

Add hexo-robotstxt-multisitemaps to plugins in _config.yml.

plugins:
  - hexo-robotstxt-multisitemaps

Add config for robots.txt to _config.yml.

robotstxt:
  useragent: "*"
  disallow:
    - /one_file_to_disallow.html
    - /2nd_file_to_disallow.html
    - /3rd_file_to_disallow.html
  allow:
    - /one_file_to_allow.html
    - /2nd_file_to_allow.html
    - /3rd_file_to_allow.html
  sitemap:
    - /sitemap.xml
    - /baidu_sitemap.xml

Reference
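Once the site has been built, the robots.txt produced from the configuration above can be sanity-checked from the command line. The sketch below is only an illustration: `check_robots` is a hypothetical helper name, and it assumes the plugin writes the standard `User-agent:` and `Sitemap:` directives, which is not confirmed here.

```shell
# Sketch: sanity-check a generated robots.txt.
# Assumption: the plugin emits standard "User-agent:" and "Sitemap:" lines.
# check_robots is a hypothetical helper, not part of Hexo or the plugin.
check_robots() {
  local file="$1"
  # The file must exist and be non-empty.
  [ -s "$file" ] || { echo "missing or empty: $file" >&2; return 1; }
  # Directive names in robots.txt are case-insensitive, so match with -i.
  grep -qi '^user-agent:' "$file" || { echo "no User-agent line" >&2; return 1; }
  grep -qi '^sitemap:' "$file" || { echo "no Sitemap line" >&2; return 1; }
  echo "ok: $file"
}
```

For example, after `hexo generate` you could run `check_robots public/robots.txt`, assuming Hexo's default `public` output directory.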

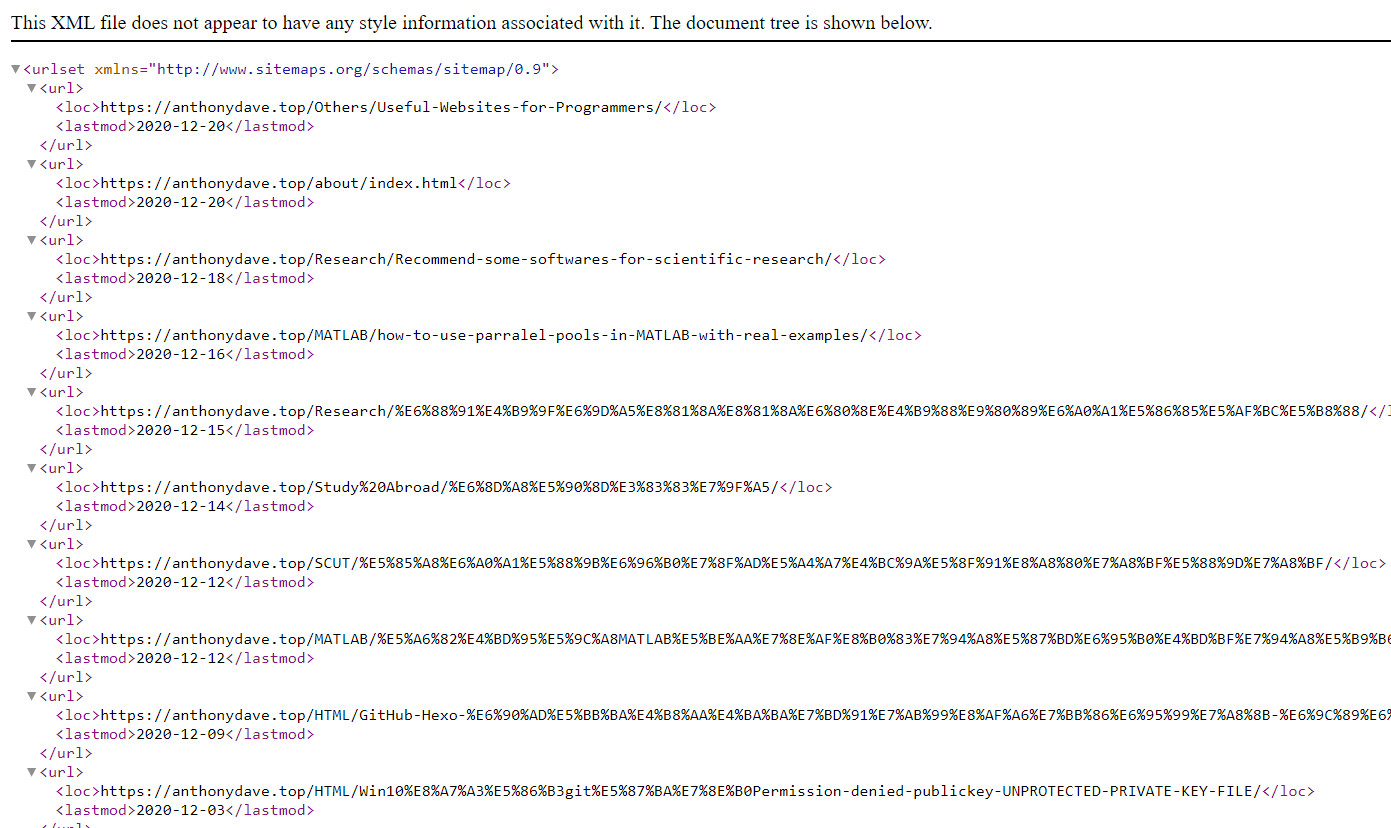

sitemap.xml

Install

$ npm install hexo-generator-sitemap --save

Use the plugin version that matches your Hexo version:

- Hexo 4: 2.x

- Hexo 3: 1.x

- Hexo 2: 0.x
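The version table above can be turned into a small helper that prints the matching install command. This is a sketch under stated assumptions: the function names are hypothetical, and the mapping covers only the three Hexo versions listed, so any other version is reported as unknown rather than guessed.

```shell
# Sketch: map a Hexo major version to the matching
# hexo-generator-sitemap release line, using only the table above.
# Both function names are hypothetical helpers.
sitemap_plugin_range() {
  case "$1" in
    4) echo "2.x" ;;
    3) echo "1.x" ;;
    2) echo "0.x" ;;
    *) echo "unknown Hexo major version: $1" >&2; return 1 ;;
  esac
}

# Print (do not run) the install command for a given Hexo major version.
sitemap_install_command() {
  local range
  range=$(sitemap_plugin_range "$1") || return 1
  echo "npm install hexo-generator-sitemap@${range} --save"
}
```

Calling `sitemap_install_command 3` prints `npm install hexo-generator-sitemap@1.x --save`, which you can then review and run yourself.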

Options

You can configure this plugin in _config.yml.

sitemap:
  path: sitemap.xml
  template: ./sitemap_template.xml
  rel: false
  tags: true
  categories: true

- path - Sitemap path. (Default: sitemap.xml)

- template - Custom template path. This file will be used to generate sitemap.xml (See default template)

- rel - Add rel-sitemap to the site’s header. (Default: false)

- tags - Add site’s tags

- categories - Add site’s categories

Exclude Posts/Pages

Add sitemap: false to the post/page’s front matter.

---
title: lorem ipsum
date: 2020-01-02
sitemap: false
---

Reference
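To review which posts are currently excluded, you can scan the front matter of your source files for `sitemap: false`. The sketch below is an assumption-laden illustration: `list_sitemap_excluded` is a hypothetical helper, and it expects the front matter to start on the first line with `---` and to use exactly the `sitemap: false` form shown above.

```shell
# Sketch: list files whose front matter contains "sitemap: false".
# Assumptions: front matter starts on line 1 with "---" and ends at the
# next "---"; list_sitemap_excluded is a hypothetical helper name.
list_sitemap_excluded() {
  local file
  for file in "$@"; do
    [ -f "$file" ] || continue
    awk '
      NR == 1 { if ($0 != "---") exit; next }     # no front matter: stop
      $0 == "---" { exit }                         # end of front matter
      /^sitemap:[ \t]*false[ \t]*$/ { found = 1; exit }
      END { exit (found ? 0 : 1) }                 # status 0 only if found
    ' "$file" && echo "$file"
  done
  return 0
}
```

A typical call would be `list_sitemap_excluded source/_posts/*.md`, assuming your posts live in Hexo's default `source/_posts` directory.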

baidu_sitemap.xml

See the hexo-generator-baidu-sitemap plugin's documentation to learn how to set it up. The steps are similar to those above.

Test it

Now run hexo clean && hexo d, then check that the generated txt and xml files are reachable on your site, for example:

https://anthonydave.top/robots.txt

https://anthonydave.top/sitemap.xml

https://anthonydave.top/baidu_sitemap.xml
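Before deploying, the same three files can also be checked locally. The following is a minimal sketch, assuming you have run `hexo generate` and that Hexo writes to its default `public` directory; `check_generated` is a hypothetical helper name.

```shell
# Sketch: verify that the three generated files exist and are non-empty.
# Assumption: the site was built into Hexo's default "public" directory.
# check_generated is a hypothetical helper, not a Hexo command.
check_generated() {
  local dir="${1:-public}"
  local name
  local status=0
  for name in robots.txt sitemap.xml baidu_sitemap.xml; do
    if [ -s "$dir/$name" ]; then
      echo "ok      $name"
    else
      echo "missing $name"
      status=1
    fi
  done
  return "$status"
}
```

Running `check_generated` with no argument inspects `public`; pass another directory if your `public_dir` setting differs.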

Unless otherwise stated, all posts on this blog are licensed under CC BY-SA 4.0. Please credit the source when reposting!